Pre-virtualization

Introduction

Pre-virtualization is a virtual-machine construction technique that combines the performance of para-virtualization with the modularity of traditional virtualization. Modularity is the common factor in the applications of virtualization, whether server consolidation, operating system migration, debugging, secure computing platforms, or backwards compatibility. Many of these scenarios involve strong trade-offs, e.g., security versus performance, and virtual machines address them by substituting hypervisors that are specialized for each scenario. Pre-virtualization provides performance without creating strong dependencies between the guest operating system and the hypervisor; it maintains both hypervisor neutrality and guest operating system neutrality.

We offer two pre-virtualization solutions, VMI and Afterburning, both of which share a common runtime environment.

VMI

The Virtual Machine Interface (VMI) is a proposed interface for pre-virtualization initiated by VMware. VMI closely follows the hardware interface so that operating systems and hypervisors can interoperate even when developed independently by non-collaborating developers. As of March 2006, VMI has been manually applied to the Linux 2.6 kernel. We hope to see it officially adopted by Linux, the BSD family, Solaris, Darwin, etc., thus enabling high-performance virtualization on any hypervisor that implements VMI.

We have almost completed our support for the latest version of VMI; it requires a few changes to our runtime, and will support Xen v2 and Xen v3. We will release it as soon as we have completed the changes.

Afterburning

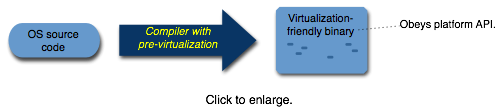

Our Afterburner project automates the application of pre-virtualization to a guest operating system. The automation brings quick access to a high-performance virtualization environment, especially for kernels that are no longer maintained and thus unlikely to receive the attention necessary for manual application of VMI. Some aspects of our interface still require manual application; we have designed solutions that reach full automation, but have not yet implemented them.

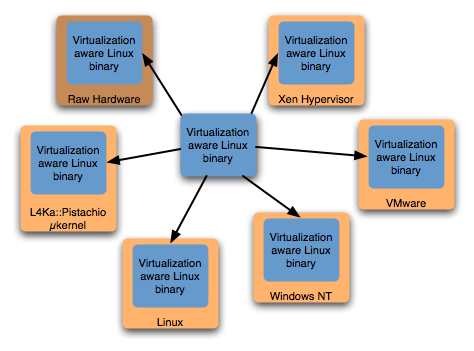

Our Afterburner produces binaries that execute on raw hardware, our L4Ka::Pistachio microkernel, and the Xen hypervisor (v2 and v3). The Afterburner automatically locates virtualization-sensitive instructions, pads them with scratch space for runtime instruction rewriting, and annotates them. In the future we will adapt our automation to apply the VMI interface, which also uses instruction padding and annotations. We support several versions of Linux 2.6 and Linux 2.4, and can easily track new versions of the kernel.
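The locate, pad, and annotate steps can be illustrated with a minimal Python sketch that operates on GNU assembler text. This is not the Afterburner's actual implementation: the mnemonic list, the padding size `PAD_NOPS`, the section name `.sensitive_sites`, and the function name `afterburn` are all assumptions chosen for illustration.

```python
import re

# Assumption: an illustrative subset of virtualization-sensitive x86 mnemonics.
# A real tool would also need to handle operand-dependent cases (e.g. moves to
# control registers) and mnemonics with size suffixes.
SENSITIVE = {"cli", "sti", "pushf", "popf", "hlt", "iret", "lgdt", "lidt"}
PAD_NOPS = 6                              # hypothetical scratch space per site
ANNOTATION_SECTION = ".sensitive_sites"   # hypothetical section name


def afterburn(asm_text):
    """Return assembler text with sensitive instructions padded and annotated."""
    out = []
    site = 0
    for line in asm_text.splitlines():
        match = re.match(r"\s*([a-z]+)\b", line)
        if match and match.group(1) in SENSITIVE:
            begin = f".Lsite{site}_begin"
            end = f".Lsite{site}_end"
            out.append(f"{begin}:")
            out.append(line)                      # the original instruction
            out.extend(["\tnop"] * PAD_NOPS)      # scratch space for rewriting
            out.append(f"{end}:")
            # Record the site boundaries in a separate section so that a
            # runtime can find every padded instruction later.
            out.append(f"\t.pushsection {ANNOTATION_SECTION}")
            out.append(f"\t.long {begin}, {end}")
            out.append("\t.popsection")
            site += 1
        else:
            out.append(line)
    return "\n".join(out)
```

Because the original instruction is left in place and followed only by no-ops, the padded output still behaves like the unmodified code when no runtime rewrites it, which is consistent with the same binary also executing on raw hardware.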

One advantage of our automated approach is that it works for devices too. For example, we automatically instrument the DP83820 Gigabit Ethernet driver, so that when executing in a virtual machine, we have very high-performance virtualized networking while using Linux's standard DP83820 network driver. Typical virtual machine environments require a custom network device driver in the guest OS to obtain high performance; since we automate the virtualization, we quickly enable high-performance virtual machines for a variety of kernels, particularly older kernels.

In-Place VMM

The core runtime used by both VMI and Afterburning is our in-place VMM (IPVMM), also known as the wedge, or the ROM. The IPVMM translates the low-level platform interactions of the guest OS into the high-level interface of the hypervisor. For performance reasons, the translation takes place within the guest kernel's address space. The IPVMM is specific to the hypervisor, and is neutral to the guest OS since it emulates the well-defined platform interface (processor instruction set and platform devices). We have implemented an IPVMM for the L4Ka::Pistachio microkernel, the Xen v2 hypervisor, and the Xen v3 hypervisor. A single Linux kernel may run on any of these hypervisors. We are additionally developing an IPVMM to run as a native Windows application, and some small effort was put into an IPVMM to run as a Linux application.
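To illustrate how a hypervisor-specific runtime might use padded and annotated instruction sites, the following Python sketch rewrites each site in a loaded code image into a call to an emulation routine. It is a sketch under stated assumptions, not the IPVMM's actual code: the call-based replacement strategy, the `handlers` table keyed by the original opcode byte, and the function names are hypothetical. The x86 encodings used (`0xE8` for a call with a 32-bit relative displacement, `0x90` for a no-op, `0xFA` for `cli`) are standard.

```python
import struct

NOP = 0x90
CALL_REL32 = 0xE8   # x86 opcode: call with 32-bit relative displacement
CALL_LEN = 5        # one opcode byte plus four displacement bytes


def rewrite_site(image, base, start, end, handler_addr):
    """Overwrite image[start:end] with a call to handler_addr.

    image is a bytearray holding the guest code, base is the virtual address
    at which image[0] is mapped, and any bytes left over are filled with NOPs.
    """
    if end - start < CALL_LEN:
        raise ValueError("site has too little scratch space for a call")
    next_insn = base + start + CALL_LEN
    disp = handler_addr - next_insn   # displacement is relative to next insn
    image[start:start + CALL_LEN] = bytes([CALL_REL32]) + struct.pack("<i", disp)
    image[start + CALL_LEN:end] = bytes([NOP]) * (end - start - CALL_LEN)


def apply_backend(image, base, sites, handlers):
    """Rewrite every annotated (start, end) site using a per-backend table.

    handlers is a hypothetical map from the original opcode byte to the
    address of the routine that emulates it for one particular hypervisor.
    """
    for start, end in sites:
        opcode = image[start]
        rewrite_site(image, base, start, end, handlers[opcode])
```

In this sketch, swapping the `handlers` table is all that distinguishes one hypervisor backend from another, while the guest image and its annotations stay the same. That mirrors the property described above: the runtime is specific to the hypervisor, and the guest kernel is not.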

News

We no longer use a customized binutils for afterburning; we now have a dedicated Afterburner utility, based on ANTLR, that parses and rewrites the assembler. We added support for Xen 3, although it is not thoroughly tested. We added support for more versions of Linux 2.6, and we added support for Linux 2.4. We also added an APIC device model.

Supported Systems

Hypervisors: L4Ka::Pistachio, Xen 2.0, Xen 3.0, Windows NT (in development)

Guest operating systems: Linux 2.6, Linux 2.4

Itanium: Itanium support has been implemented at UNSW for three hypervisors: vNUMA, Xen/ia64, and Linux.

Audience

The afterburning infrastructure is in active development, and targeted towards people interested in evaluating pre-virtualization solutions. It is a research project, and not yet a user-friendly product. A project such as this requires experimentation and time to find best practices; the result will be a superior user-friendly product.

Licensing

Most of the source code is authored and copyrighted by the University of Karlsruhe, and is released under the two-clause BSD license. The remaining source code has been imported from other open source projects. It is our goal that any runtime binaries are subject only to the BSD license, and as of 11 July 2005, the L4 and KaXen runtimes are completely BSD.

Getting Started

- Download

- README.txt - We provide an automated build system that downloads all packages, applies patches, configures options, and builds.

- Mailing list - Conceptual discussions and feature discussions are welcome. Support requests may go unheeded due to lack of resources. Our goal is to ensure that support is unnecessary.

Background Material

- Pre-Virtualization: Soft Layering for Virtual Machines - A technical report, which provides a principled approach to modifying operating systems for executing on hypervisors. July 2006.

- Pre-Virtualization: Uniting Two Worlds - The single-page abstract that accompanied our SOSP poster. October 2005.

- Pre-Virtualization: Slashing the Cost of Virtualization - A technical report, which compares pre-virtualization to para-virtualization (and traditional virtualization) from a cost perspective. November 2005.

- Implementation guide (957kB PDF) - A presentation describing the internals of the implementation. March 2006.

- White paper (23kB PDF) - Two-page motivation and technical overview. 6 April 2005.

- Slide presentation (793kB PDF) - Walks through motivation, concepts, and explains technical aspects. 22 September 2005.

Limitations

The infrastructure is in active development, and isn't completely mature. We haven't released our support for reusing device drivers.

Note: using a VGA console isn't really tested. It is best to use a serial port as the console, although the serial port support is still incomplete. For true interaction, use an ssh connection to the guest OS.